Clemens Eppner

ceppner at nvidia dot com

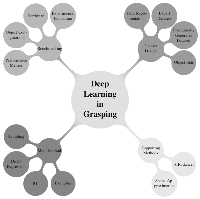

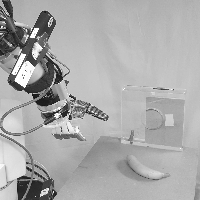

I am a Research Scientist in the Seattle Robotics Lab at NVIDIA Research, led by Dieter Fox. My focus is on grasping and manipulation, including planning, control, and perception. Despite the apparent specificity of this problem domain, I believe that the hybrid-systems nature of grasping and manipulation extends to a wide range of decision-making problems. Furthermore, I consider robotics to be a fundamentally empirical enterprise. Thus, building systems that alter our physical world is integral to my work.

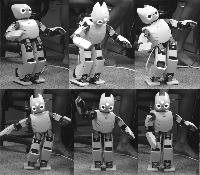

Before joining NVIDIA, I received my Ph.D. from TU Berlin, where I worked in the Robotics and Biology Lab under the supervision of Oliver Brock. While studying at the University of Freiburg, I wrote my Master's thesis in the Autonomous Intelligent Systems lab headed by Wolfram Burgard and worked in Sven Behnke's Humanoid Robots Group. I also enjoyed a research stay at Pieter Abbeel's Robot Learning Lab at UC Berkeley.